Prediction of Patient Readmission in Intensive Care Unit Using Machine Learning Techniques

Hila Delouya

Advisor: Dr. Yehudit Aperstein

Client: Beilinson Intensive Care Unit

Industrial Engineering

Project Overview:

Patients readmitted to an ICU during the same hospitalization have an increased risk of death, a longer length of stay, and higher costs. The model can support the decision-making process used when discharging patients from the intensive care unit at Beilinson Hospital.

Project Challenges:

Imbalanced dataset (6% readmission rate)

A large portion of missing values (14%)

High correlation between the features

Very few positive instances to learn from (271 patients)

The Model

Inputs: Laboratory tests & bedside monitors

Data Preprocessing: Balancing data & dealing with missing values

Classification: Ensemble models

Output: Readmission probability

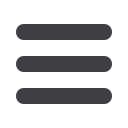

7,503 ICU admissions (6,095 patients)
→ 1,738 patients excluded: 658 died in the ICU, 147 died in the following 14 days, 933 with an ICU stay < 24 hours.
→ 4,357 patients with ICU length of stay (LOS) ≥ 24 h, discharged alive from the ICU
   → 271 (6%) readmitted within 7 days of ICU discharge
   → 4,086 patients discharged alive after one ICU stay

Fig. 1: Patient flowchart
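The counts in Fig. 1 are internally consistent, and a few lines of arithmetic also give the class imbalance the model has to handle. The variable names below are illustrative; the numbers are taken directly from the flowchart.

```python
# Counts taken from Fig. 1 (variable names are illustrative)
patients = 6_095
excluded = {
    "died in the ICU": 658,
    "died in the following 14 days": 147,
    "ICU stay < 24 h": 933,
}
cohort = patients - sum(excluded.values())  # ICU LOS >= 24 h, discharged alive
readmitted = 271                            # readmitted within 7 days of ICU discharge
not_readmitted = cohort - readmitted        # discharged alive after one ICU stay

readmission_rate = readmitted / cohort
imbalance = not_readmitted / readmitted     # non-readmitted patients per readmitted one

print(cohort, not_readmitted, f"{readmission_rate:.1%}", f"{imbalance:.1f}:1")
# prints: 4357 4086 6.2% 15.1:1
```

Roughly 15 non-readmitted patients per readmitted patient is what motivates the balancing step described below.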

Preprocessing

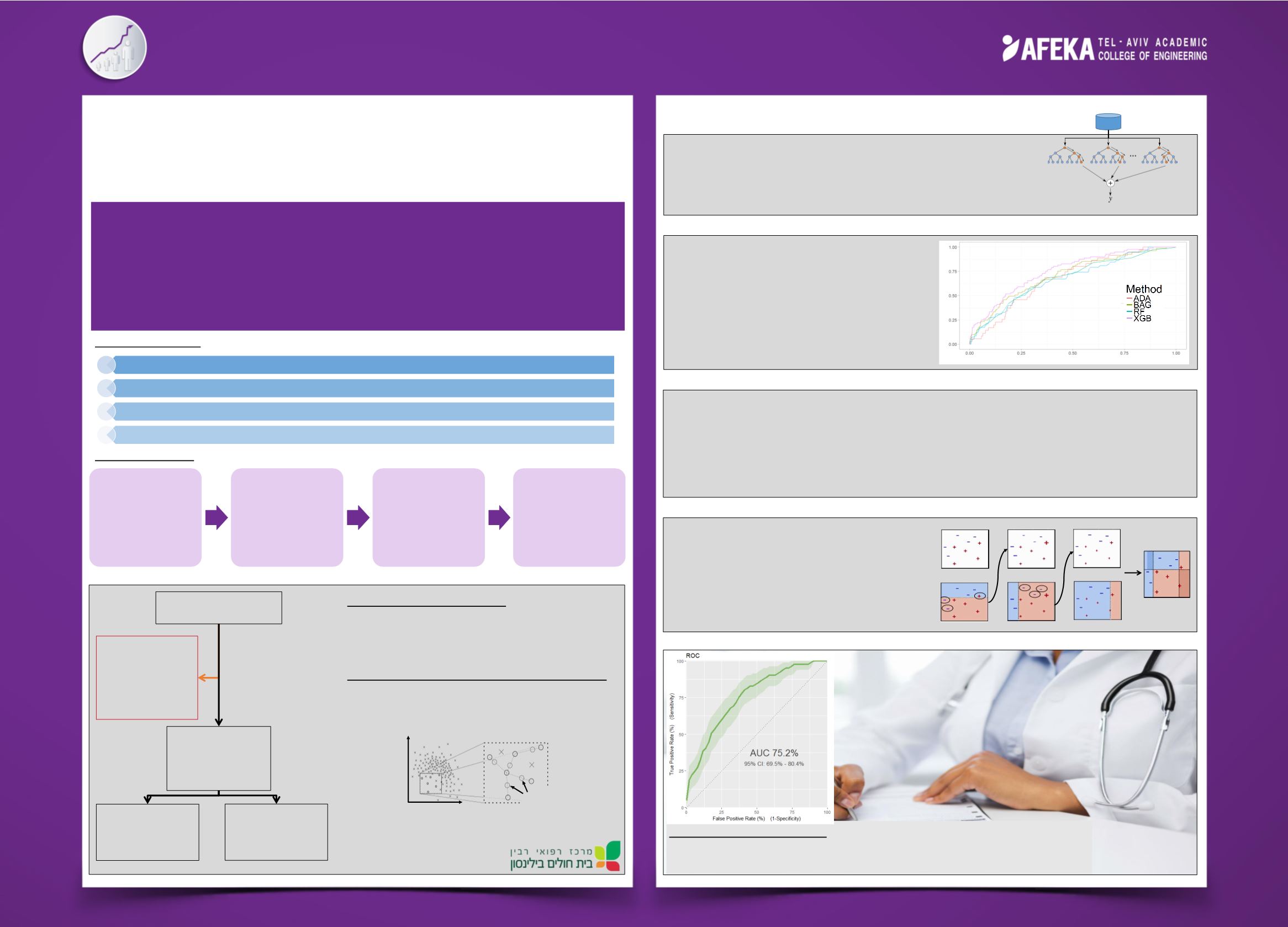

Fig. 2: SMOTE example

Splitting the data into 70% training and 30% test sets.

Balancing the training set (1:1) with SMOTE.

Applying XGBoost, an ensemble model based on decision trees.

Validation: 10-fold cross-validation with 10 repeats.
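The split-and-validate protocol can be sketched with index bookkeeping alone. This is a minimal standard-library sketch under one assumption: that both the 70/30 split and the folds are stratified, so each part keeps the roughly 6% readmission rate (the poster does not say so explicitly). The helper names are hypothetical; scikit-learn's train_test_split (with its stratify argument) and RepeatedStratifiedKFold provide the same functionality.

```python
import random

# Hypothetical helpers, standard library only (assumes stratified splitting).


def stratified_split(labels, test_fraction=0.3, seed=0):
    """Return (train_idx, test_idx) with each class split in the same proportion."""
    rng = random.Random(seed)
    train, test = [], []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        n_test = round(len(idx) * test_fraction)
        test += idx[:n_test]
        train += idx[n_test:]
    return sorted(train), sorted(test)


def repeated_stratified_kfold(labels, n_splits=10, n_repeats=10, seed=0):
    """Yield (train_idx, val_idx) for n_repeats reshuffled rounds of stratified k-fold."""
    rng = random.Random(seed)
    for _ in range(n_repeats):
        folds = [[] for _ in range(n_splits)]
        for cls in sorted(set(labels)):
            idx = [i for i, y in enumerate(labels) if y == cls]
            rng.shuffle(idx)
            # Deal each class round-robin so every fold gets a similar class mix
            for pos, i in enumerate(idx):
                folds[pos % n_splits].append(i)
        for f in range(n_splits):
            val = sorted(folds[f])
            train = sorted(i for g in range(n_splits) if g != f for i in folds[g])
            yield train, val
```

With the poster's cohort (271 positives, 4,086 negatives), stratified_split puts 81 readmitted patients in the test set, and repeated_stratified_kfold yields 100 train/validation pairs. If SMOTE is combined with cross-validation, applying it only to each training fold keeps synthetic cases out of the validation fold, so the validation AUC is measured on real patients only.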

Dealing with missing values:

1. Imputation (KNN & other)

2. Discretization

3. Binarization

4. Smoothing
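KNN imputation fills a missing measurement from the patients who look most similar on the measurements they do share. The sketch below is one common form of it, under stated assumptions: missing values are None, the distance uses only features present in both rows (rescaled by the fraction of shared features), and each gap gets the mean of that feature over the k nearest patients who have it recorded. This mirrors the idea behind scikit-learn's KNNImputer but is a standalone illustration; knn_impute is a hypothetical name and k = 5 is an arbitrary default.

```python
import math


def knn_impute(rows, k=5):
    """Hypothetical helper: fill None entries with the mean of the k nearest rows' values.

    Distance is Euclidean over features observed in both rows, scaled by
    n_features / n_shared so rows with few shared features are not favoured.
    """
    n_features = len(rows[0])

    def distance(a, b):
        shared = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
        if not shared:
            return math.inf
        sq = sum((x - y) ** 2 for x, y in shared)
        return math.sqrt(sq * n_features / len(shared))

    imputed = []
    for r, row in enumerate(rows):
        new_row = list(row)
        for j, value in enumerate(row):
            if value is not None:
                continue  # observed values are left untouched
            # Candidate donors: other rows that have feature j measured
            candidates = [
                (distance(row, other), other[j])
                for o, other in enumerate(rows)
                if o != r and other[j] is not None
            ]
            candidates = sorted(c for c in candidates if c[0] < math.inf)
            nearest = [v for _, v in candidates[:k]]
            if nearest:
                new_row[j] = sum(nearest) / len(nearest)
        imputed.append(new_row)
    return imputed
```

Features should be on comparable scales (for example, standardized) before computing these distances; otherwise a lab value measured in large units dominates the neighbour search.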

Synthetic Minority Over-sampling Technique (SMOTE):

Undersampling the majority class by 135%.

Oversampling the minority class by 300% (K = 8).
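A minimal SMOTE sketch in plain Python: each synthetic case is placed at a random point on the line segment between a readmitted patient and one of its K nearest readmitted neighbours. How the percentages map to counts is an assumption here: the sketch follows the convention of R's DMwR SMOTE function (perc.over, perc.under), where perc.over = 300 creates three synthetic cases per minority case and perc.under = 135 keeps 1.35 majority cases per synthetic case. The function names are hypothetical, and numeric, already-imputed features are assumed.

```python
import math
import random


def k_nearest(point, others, k):
    """Return the k points in `others` closest to `point` (Euclidean distance)."""
    return sorted(others, key=lambda q: math.dist(point, q))[:k]


def smote(minority, perc_over=300, k=8, seed=0):
    """Create perc_over // 100 synthetic cases per minority case (perc_over >= 100 assumed).

    Each synthetic case lies on the segment between a minority case and one of
    its k nearest minority neighbours.
    """
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority cases")
    rng = random.Random(seed)
    synthetic = []
    for i, x in enumerate(minority):
        neighbours = k_nearest(x, minority[:i] + minority[i + 1:], k)
        for _ in range(perc_over // 100):
            nn = rng.choice(neighbours)
            gap = rng.random()  # uniform in [0, 1)
            synthetic.append([xi + gap * (ni - xi) for xi, ni in zip(x, nn)])
    return synthetic


def majority_to_keep(n_synthetic, perc_under=135):
    """Assumed DMwR-style convention: keep perc_under% of the number of synthetic cases."""
    return perc_under * n_synthetic // 100
```

Under that reading the settings are consistent with a 1:1 balance. With about 190 readmitted patients in the training split (an estimate: 70% of 271), perc_over = 300 adds 570 synthetic cases for 760 minority cases in total, and majority_to_keep(570) returns 769 non-readmitted cases.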

Extreme Gradient Boosting (XGBoost) is an optimized distributed gradient boosting system that builds additive tree models.
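The idea of an additive tree model can be shown with plain gradient boosting. XGBoost itself adds second-order (Newton) updates, regularization, and many engineering optimizations; the sketch below keeps only the core loop: start from a score of zero, fit a one-split regression tree (a stump) to the negative gradient of the log-loss, add a damped copy of it to the model, and repeat. It is a from-scratch illustration, not the xgboost library API; the helper names, learning rate, and number of rounds are arbitrary choices.

```python
import math


def sigmoid(z):
    """Map a raw score (log-odds) to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_stump(xs, residuals):
    """Fit a one-split regression tree to the residuals by least squares."""
    best = None
    for j in range(len(xs[0])):
        for t in sorted({x[j] for x in xs}):
            left = [r for x, r in zip(xs, residuals) if x[j] <= t]
            right = [r for x, r in zip(xs, residuals) if x[j] > t]
            if not left or not right:
                continue  # the split must separate the data
            lv, rv = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, j, t, lv, rv)
    if best is None:  # no feature varies: fall back to a constant leaf
        mean_r = sum(residuals) / len(residuals)
        return lambda x: mean_r
    _, j, t, lv, rv = best
    return lambda x: lv if x[j] <= t else rv


def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.3):
    """Additive model F(x) = sum of learning_rate * tree(x), fitted to log-loss gradients."""
    trees = []

    def raw_score(x):
        return sum(learning_rate * tree(x) for tree in trees)

    for _ in range(n_rounds):
        # y - p is the negative gradient of the log-loss w.r.t. the raw score
        residuals = [y - sigmoid(raw_score(x)) for x, y in zip(xs, ys)]
        trees.append(fit_stump(xs, residuals))
    return lambda x: sigmoid(raw_score(x))
```

Each new tree corrects what the current ensemble still gets wrong, which is what "additive tree models" refers to. In practice one would use the xgboost package (for example its XGBClassifier) rather than this loop.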

Model stacking (GBM or linear regression).

Bagging of similar non-readmitted patients (k bags).

Asymmetric AdaBoost (assigning higher initial weights to the positive instances).

Feature selection by correlation threshold.

Clustering patients by similarity and creating K different ensemble models.
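Three of these methods reduce to simple data manipulations that can be sketched directly. The code below is illustrative only, and several details are assumptions: the correlation threshold (0.9), the greedy keep-in-column-order rule, the positive-class weight factor (2.0), and the random partition of non-readmitted patients into k bags. The poster's bagging groups similar non-readmitted patients, which would replace the random shuffle with a similarity-based grouping. All function names are hypothetical.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation of two equal-length numeric sequences (0.0 if either is constant)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return 0.0 if sa == 0 or sb == 0 else cov / (sa * sb)


def drop_correlated(columns, threshold=0.9):
    """Feature selection by correlation threshold (greedy, in column order).

    A feature is kept only if |r| with every already-kept feature is <= threshold.
    """
    kept = []
    for name, values in columns.items():
        if all(abs(pearson(values, columns[k])) <= threshold for k in kept):
            kept.append(name)
    return kept


def asymmetric_initial_weights(labels, positive_factor=2.0):
    """Initial AdaBoost weights, with positives (label 1) weighted positive_factor times higher."""
    raw = [positive_factor if y == 1 else 1.0 for y in labels]
    total = sum(raw)
    return [w / total for w in raw]


def majority_bags(negatives, positives, k, seed=0):
    """Partition the non-readmitted patients into k disjoint bags, each paired with all positives.

    Assumption: a random partition; grouping by patient similarity would replace the shuffle.
    """
    shuffled = list(negatives)
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i::k] + list(positives) for i in range(k)]
```

With about 15 non-readmitted patients per readmitted one, choosing k near 15 makes each bag roughly balanced; a separate model is then trained on each bag, and one common choice is to average their predicted probabilities.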

1. XGBoost: AUC 75.2%

2. AdaBoost: AUC 70.8%

3. Random Forest: AUC 67.1%

4. Adabag: AUC 66%

5. Bagging: AUC 65.18%
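The AUC figures above are areas under the ROC curve. AUC equals the probability that a randomly chosen readmitted patient receives a higher predicted probability than a randomly chosen non-readmitted patient, with ties counting one half. That equivalence gives a direct, if quadratic-time, way to compute it; roc_auc is a hypothetical helper name.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly (ties count 1/2)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # prints: 0.75
```

An AUC of 75.2% therefore means the model ranks a readmitted patient above a non-readmitted one in about three of four such pairs. For larger test sets, scikit-learn's roc_auc_score computes the same quantity more efficiently.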

Fig. 3: Ensemble model

Ensemble Models

Other Implemented Methods

The best classifier: XGBoost

Fig. 4: Boosting example

Results

Results of previous research:

1. Ouanes, 2012: AUC 0.74 ± 0.06

2. Vieira, 2013: AUC 0.72 ± 0.04

3. Afonso, 2013: AUC 0.68 ± 0.03

4. Fialho, 2013: AUC 0.64 ± 0.10

The model improves the doctor’s decision by 50%.

Fig. 2 label: synthetic instances

Fig. 4 panels: original data set (D1) → trained classifier → update weights (D2) → trained classifier → update weights (D3) → trained classifier → combined classifier

ROC curve axes: True Positive Rate (Sensitivity) vs. False Positive Rate (1 − Specificity)